Artificial Intelligence: A Friend or a Threat?

I remember watching a movie titled “I, Robot” as a child. The movie was mainly about investigating whether a worker at a robotics company had been murdered by one of the robots. I found it scary because the robots behaved so much like actual human beings, and I closed my eyes for some parts of it. Still, one thought stayed in my mind: could this ever become a reality? Fast forward to the present: a Hong Kong-based company called Hanson Robotics has developed a human-like robot named Sophia, which was activated in early 2016. Sophia can hold a conversation and appears to bond with people, so interacting with her can feel almost like dealing with an actual human being.

Robots are one application of Artificial Intelligence, or AI for short. Nowadays, AI comes in many forms. Companies like Apple and Google have launched their respective virtual assistants, Siri and Google Assistant, which can help you in many ways, such as pointing you to the nearest restaurants or informing you of current traffic conditions. AI has also helped 38-year-old Akihiko Kondo find love: he married Hatsune Miku, a virtual anime-style character. AI is also incorporated in law enforcement and security systems to detect wanted criminals. It is fair to say that AI has integrated itself so seamlessly into our everyday lives that sometimes we don’t even think about how we use it, from unlocking our phones with Face ID to making a quick online bank transaction.

What’s the problem with AI?

On the surface, AI might seem pretty much flawless. After all, it is making our lives more convenient and safer. But as human beings, it is common to be doubtful, so naturally the question “Is that true?” arises. Joy Buolamwini, a researcher in the MIT Media Lab’s Civic Media group, found a rather controversial flaw in AI: gender and skin-type bias.

1. Gender and Skin-type Bias

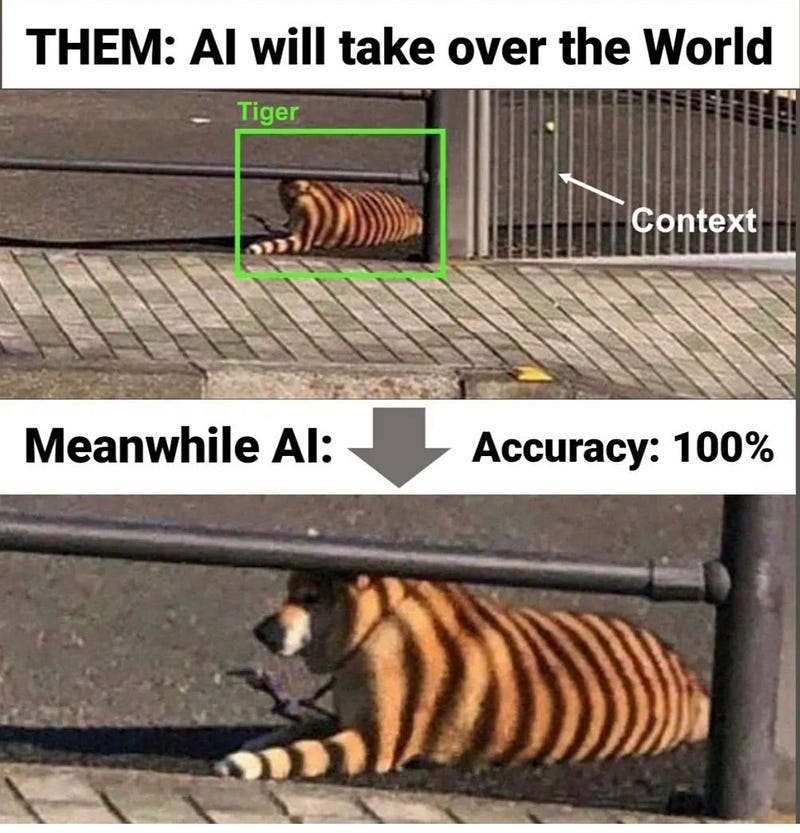

Based on Buolamwini’s research, three commercially released facial-analysis programs from major American technology companies demonstrate skin-type and gender biases. According to an article posted on MIT News, her research found that the error rate in determining the gender of light-skinned men was never higher than 0.8%, but for darker-skinned women it jumped to more than 20% in one case and more than 34% in the other two. AI learns to perform computational tasks by finding patterns in massive data sets, so the make-up of those data sets matters. Researchers at a major technology company claimed that their facial-recognition system was more than 97% accurate; however, the data set used to test that system was unbalanced, with more males than females and more people of one ethnicity than of others.
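To see how a single headline accuracy figure can hide this kind of gap, here is a minimal Python sketch. The counts are invented purely for illustration and are not Buolamwini’s data; the group sizes and the `error_rate` helper are assumptions made for this example.

```python
# Hypothetical evaluation results: (group, faces tested, faces misclassified).
# These counts are invented for illustration only.
results = [
    ("lighter-skinned men", 800, 4),
    ("lighter-skinned women", 100, 6),
    ("darker-skinned men", 60, 6),
    ("darker-skinned women", 40, 12),
]


def error_rate(errors: int, total: int) -> float:
    """Return the share of misclassified samples as a percentage."""
    return 100.0 * errors / total


# Accuracy over the whole (unbalanced) test set.
total_faces = sum(total for _, total, _ in results)
total_errors = sum(errors for _, _, errors in results)
overall_accuracy = 100.0 - error_rate(total_errors, total_faces)

# Error rate computed separately for each group.
per_group = {group: error_rate(errors, total) for group, total, errors in results}

print(f"Overall accuracy: {overall_accuracy:.1f}%")
for group, rate in per_group.items():
    print(f"{group}: {rate:.1f}% error")
```

With these made-up numbers the overall accuracy comes out at 97.2%, yet the smallest group has a 30.0% error rate. Because that group makes up only 4% of the test set, its errors barely move the headline figure.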

As previously stated, AI is also used in law enforcement, a practice sometimes described as algocracy, or governance by algorithm. In the US, authorities have used AI to predict recidivism, which is the likelihood that a convicted criminal will reoffend. The software used for this is known as Correctional Offender Management Profiling for Alternative Sanctions (COMPAS), developed and owned by Equivant. COMPAS was found to be biased against Black defendants, profiling them as having a higher risk of recidivism. To be racially discriminated against in a court of law, where everything should revolve around fairness and righteousness, only serves to undermine the very name of justice.

This issue is not confined to the US. In China, a report published by UN Women and Mana Data Foundation, a Shanghai-based public welfare foundation, found systematic sexism against women in search engines from major Chinese technology companies such as Baidu, Sogou and 360. Searching for words like “CEO”, “engineer”, or “scientist” returns mostly images of men, whereas searching for keywords like “women” or “feminine” often returns pornographic material and information about the vagina.

In this way, the social issues clouding our communities at present could be further perpetuated by the use of AI. If gender and skin-type bias in AI are left unaddressed, they could shape how the people who rely on these systems perceive others. Ethnic minorities will continue to face discrimination. Through AI, men may come to be seen as the only ones who can work as professionals, and women as those who might never possess high positions in companies. All the social progress we’re making today might count for little tomorrow if nothing is done to change the mechanisms of AI accordingly.

2. Issue with Job Opportunities

The introduction of AI has made us more productive by supporting our problem-solving, reasoning, learning and planning. AI is considered our “assistant”. It is so capable and informative that some industry experts have predicted it could soon take over some human occupations, which is a concern because it would reduce job opportunities in certain fields. During an interview with CBS news presenter Scott Pelley, Kai-Fu Lee, a Taiwanese computer scientist, businessman and writer, said that he believes 40% of the world’s jobs will be replaced by robots capable of automating tasks. To be more specific, he stated that both blue-collar and white-collar professions will be affected, but he believes those who drive for a living will be affected the most. At the same time, since building AI products requires IT skills, the demand for IT experts will increase.

Nowadays, some companies use websites like LinkedIn and ZipRecruiter to recruit new workers through built-in hiring software. The software scans resumes for certain keywords to filter out the best candidates, so the HR department no longer needs to read resumes word by word. This also makes it easy for companies to accept countless applications. The issue with this software is that it has no leniency: if certain keywords are not found in the resume, the application is immediately rejected, with no consideration of any unique factors that could make a candidate stand out. Some HR departments rely heavily on this software, which in turn means that only a very limited number of people get hired. The recruitment process has lost its human touch, quite literally.
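The kind of rigid keyword screening described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the keyword list, the `passes_keyword_filter` function and both sample resumes are hypothetical, and real hiring platforms are not claimed to work exactly this way.

```python
import re
from collections.abc import Iterable

# Hypothetical keywords an employer might require; not taken from any real product.
REQUIRED_KEYWORDS = ("python", "sql", "leadership")


def passes_keyword_filter(resume_text: str, required: Iterable[str] = REQUIRED_KEYWORDS) -> bool:
    """Return True only if every required keyword appears as a whole word."""
    # Lower-case the text and split it into simple word tokens.
    words = set(re.findall(r"[a-z0-9+#]+", resume_text.lower()))
    return set(required).issubset(words)


resume_a = "Led a team of five; built reporting tools in Python and SQL. Strong leadership record."
resume_b = "Managed a team of five and automated reporting with pandas and PostgreSQL."

print(passes_keyword_filter(resume_a))  # True: all three keywords are present
print(passes_keyword_filter(resume_b))  # False: none of the exact keywords appear
```

The second resume describes comparable experience in different words, yet it is rejected outright. That is the lack of leniency at issue: an exact-match filter cannot tell that “managed a team” and “leadership” point to the same strength.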

Overall, those who are technologically savvy might not be affected by the change in job opportunities, but those with little exposure to technology may bear the consequences. Most of us are aware that access to technology education is a privilege that not everyone is given, so those who miss out have a higher probability of facing unemployment in the future. This, in turn, could lead to a myriad of social issues, most notably a widening wealth gap.

3. Cases of Exploitation

Sometimes, the issue is not AI but the users themselves. China is among the world’s leading countries in technology, including AI. Several advances are being made in the medical field, such as “AI doctor” chatbots and deep learning for medical image processing. In 2017, the Communist Party of China set a goal: to have the nation lead the world in AI by 2030. China is not alone in this ambition. It is in intense competition with the US, and both nations focus heavily on research to advance this particular field.

Although China may be catching up with the US on paper, in terms of ethics it may require some improvement. The Chinese government has been receiving global criticism for its harsh treatment of the Uighurs, an ethnic minority group that mostly lives in the region of Xinjiang. Besides sending them to detention camps, the authorities also use advanced facial recognition technology to track and control them via racial profiling. This technology is integrated throughout China’s surveillance cameras. The cameras detect Uighurs based on physical characteristics and, once a person is flagged, keep track of that person’s movements. And that’s not all: China also went one step further by having a software engineer install an emotion detection system, based on facial recognition, for use on Uighurs at police stations throughout Xinjiang.

Exploitation of AI is not just a regional issue. Globally, cybercrime is on the rise, and criminals are now using AI to carry out their malicious activities. A prominent example is the abuse of deepfakes, which involve the use of AI techniques to modify visual and audio content. Some deepfakes look authentic, and since the public is usually more convinced by visual or audio evidence than by text alone, they are an attractive method for spreading false news on social media or for scams. According to the Wall Street Journal, in 2019 the CEO of an unnamed UK-based energy firm believed that he was on a call with his boss, the chief executive of the firm’s German parent company. The caller phoned the CEO three times: first to initiate a transfer of $243,000 to the bank account of a Hungarian supplier, second to claim that the money had been reimbursed, and third to seek a follow-up payment. It was during the third call that the CEO noticed that the reimbursement had been falsely claimed and that the call had been made from an Austrian phone number, while his boss was in Germany. The CEO had been scammed. The authorities later discovered that the fraudster had used a voice deepfake to mimic the chief executive’s voice.

Due to such AI exploitation, society may never live in peace. Minorities like the Uighurs will continue to suffer. If China continues using facial recognition technology in this way, it could usher in a new era of automated racism. Beyond this, using the Internet becomes unsafe, as any of us could become a victim of cybercrime. Managing our assets has become easier via online banking, but robbery and scams have become easier too, because they can be carried out in the shadows, with no one knowing who is responsible.

The Necessary Steps That Should be Taken

A simple but far from ideal step is to remove the protected classes (i.e. race and gender) from the data and delete the labels that make AI biased. In this context, a protected class is not a class in the programming sense; it is a demographic attribute, such as gender or race, that a decision should not discriminate on. In a data set, “gender” would be a field, and “male” or “female” would be its values. A label is the answer attached to each training example, which the AI learns to predict. For example, if most of the images labelled “female” show a person wearing a dress, the AI may learn to label anyone in a dress as female. Why is this step far from ideal? Removing these fields and labels gives the AI less information to learn from, which may make it harder for it to reach a decision when it encounters an input (i.e. a person), so the results might actually become less accurate (the person may be male, but the AI identifies the person as female).
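As a rough illustration of what “removing the protected classes” means in practice, the Python sketch below strips those fields from each record before the data would be handed to a model. Every field name and value is hypothetical and invented for this example, and the `drop_protected` helper is an assumption rather than part of any particular library.

```python
# Hypothetical applicant records; every field name and value is invented.
applicants = [
    {"gender": "female", "race": "A", "postcode": "1001", "years_experience": 6, "hired": 1},
    {"gender": "male", "race": "B", "postcode": "2002", "years_experience": 4, "hired": 0},
]

# Attributes that should not drive the decision (an assumption for this sketch).
PROTECTED_ATTRIBUTES = frozenset({"gender", "race"})


def drop_protected(record: dict, protected: frozenset = PROTECTED_ATTRIBUTES) -> dict:
    """Return a copy of the record without the protected attributes."""
    return {key: value for key, value in record.items() if key not in protected}


# The rows a model would be trained on; the original records are left untouched.
training_rows = [drop_protected(row) for row in applicants]
print(training_rows[0])
```

The model trained on `training_rows` never sees gender or race directly. This does not guarantee fairness, though: a remaining field such as the hypothetical `postcode` may still correlate with the removed attributes, and the model now has less information to work with, which is part of why this step alone is considered weak.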

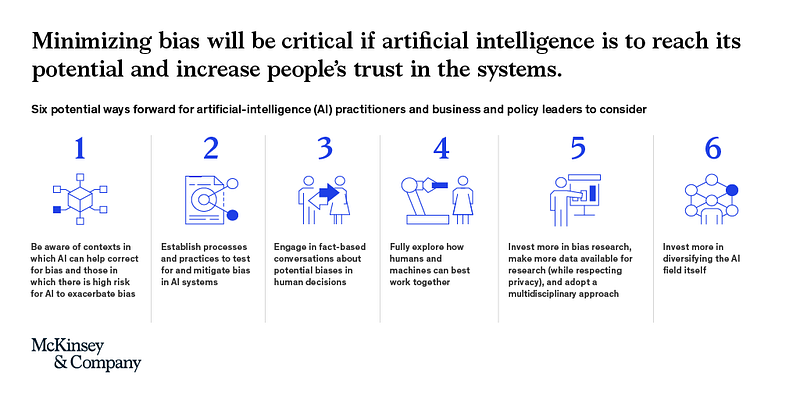

Fully eliminating biases in AI may be challenging, but according to McKinsey & Company, a management consulting firm, reducing biases in AI is a more practical approach:

Regarding the change in job opportunities, there is actually a counterargument. Even though AI is highly capable, it only performs well under certain conditions. AI does not have the instincts that we human beings are blessed with, which help us decide on an appropriate response to every situation. We are able to perform tasks under varied conditions, so, hypothetically speaking, AI can never replace human beings in most occupations. Despite this, it is undeniable that AI is rapidly becoming more integrated into society, so it is worth familiarizing ourselves with the language AI speaks. Learning programming languages such as Java, Python or C helps us gain a very useful skill: coding. This skill is applicable in many occupations, so finding a job would be less challenging for anyone who has it. Schools could offer programming classes as an extracurricular activity or as part of the syllabus, through fun and interactive lessons. This could help expose children to the IT world from a young age.

Using AI to collect the public’s data and information has raised some debate. Some collection is unavoidable: even when we create a social media account, our information needs to be collected and stored so that our profile can be told apart from others and we can keep posting content on it. However, this becomes an issue when the data is used for the wrong reasons, such as tracking individuals in the way the Chinese government does. It is highly unethical to collect the Uighurs’ data without their consent, let alone to do so for unethical purposes. This is a form of abuse, and it is our role to raise awareness of it so that world leaders can turn their attention to solving this major problem together. Signing petitions, posting on social media and writing articles may sound cliche, but if done collectively as a community, these steps could make an impact.

Besides relying on law enforcement and pushing the authorities to take action, another effective approach to curbing cybercrime is to protect yourself from becoming a victim:

Secure your network. An unsecured network makes it easier for criminals to hack your accounts and exposes you to malware such as viruses, so subscribe to a full-service internet security suite. Brands like McAfee and Norton offer such a service.

Use strong, unique passwords. Avoid using the same password for all of your accounts. Yes, I admit that it’s troublesome to keep track of multiple passwords, but imagine if someone finds out your one password: that person basically has access to all of your data and assets. There are several ways to make keeping track of them much easier, such as using password management software or, failing that, keeping them in a password-protected Excel file. What’s important is not to tell others which method you personally use.

Know what to do if you become a victim. Lodge a police report. If you become a victim of a cybercrime that involves your finances, remember to obtain your bank statements and transaction history, as they may help the authorities find the perpetrator.
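For the advice on strong passwords above, one possible way to generate a different random password for each account is Python’s standard `secrets` module, which is designed for security-sensitive randomness. This is a minimal sketch: the 16-character default, the 12-character minimum and the account names are assumptions chosen for illustration, not official recommendations.

```python
import secrets
import string

# Characters a password may be drawn from: letters, digits and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Build a random password using a cryptographically secure generator."""
    if length < 12:
        # The minimum length is an assumption made for this sketch.
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


# One separately generated password per account (account names are hypothetical).
passwords = {account: generate_password() for account in ("email", "bank", "social")}
for account, password in passwords.items():
    print(account, password)
```

Passwords like these are hard to memorize, which is exactly where a password manager helps: it stores them so that each account can have its own.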

AI is not expected to cede the spotlight anytime soon. More conglomerates are investing in AI because they see its potential to drive rapid financial and economic growth. According to Analytics Insight, as of August 2021, IBM held as many as 3,594 AI-related patents. AI has made flying safer and more efficient: modern airliners are equipped with software that can control the plane while it is cruising, so pilots can take breaks during a flight. According to Interesting Engineering, scientists are also working on an algorithm that predicts if and when a patient could die of cardiac arrest.

In my personal opinion, AI is our friend, provided we use it the right way, for the right reasons. AI definitely has its flaws, so it is our responsibility to keep researching it thoroughly and to find ways to make AI more human-friendly.

[Written by: Amirul Haziq bin Amri. Edited by: Siow Chien Wen]